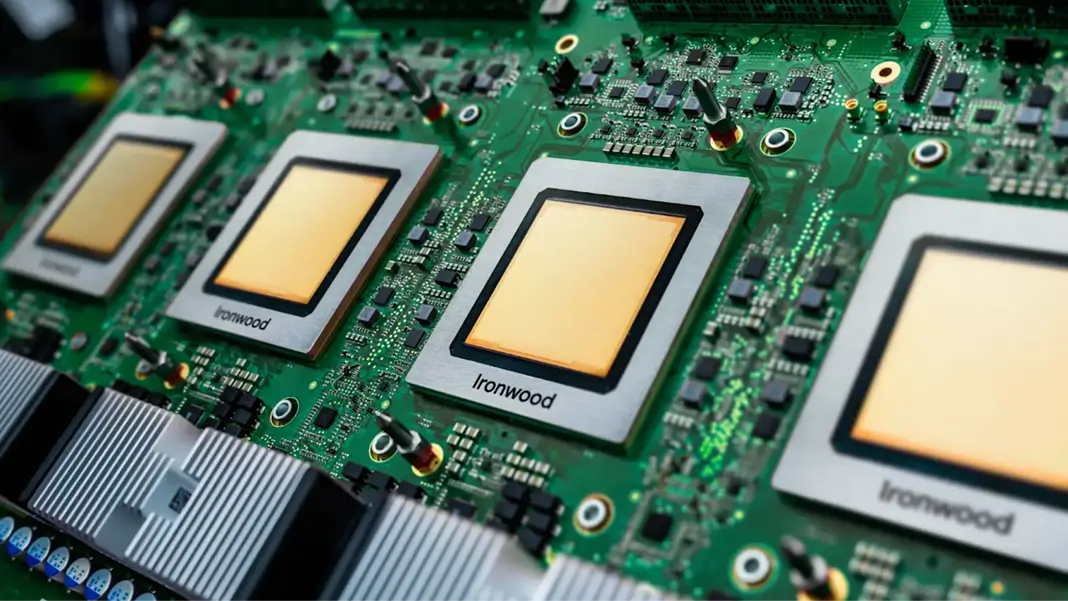

At Google Cloud Next 2025, Google unveiled Ironwood, its seventh-generation Tensor Processing Unit (TPU). Ironwood is Google's most powerful and scalable custom AI accelerator to date and the first TPU designed specifically for inference, reflecting a strategic shift toward the growing demands of generative AI and "thinking models."

For over a decade, TPUs have been the backbone of Google's AI infrastructure, powering both training and serving workloads. Ironwood builds on this legacy with greater performance and energy efficiency, and it is purpose-built to serve AI models at scale, making inference faster and more efficient.

Ironwood is designed for the next phase of generative AI and the substantial computational and communication demands of its models. An Ironwood pod scales up to 9,216 liquid-cooled chips connected by a high-bandwidth Inter-Chip Interconnect (ICI) network and draws nearly 10 MW of power. At that scale, a pod delivers 42.5 exaFLOPS of peak compute, which Google says surpasses the world's largest supercomputers; this is a low-precision (FP8) peak figure, so it is not directly comparable to the FP64 benchmarks used to rank supercomputers. The architecture is a key component of Google Cloud's AI Hypercomputer, which co-optimizes hardware and software for demanding AI workloads.
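The pod-level figures follow from simple multiplication. The sketch below assumes Google's published per-chip peak of 4,614 TFLOPS (a figure not stated above) and treats "nearly 10 MW" as a round 10 MW upper bound for the whole pod, so the derived per-chip power and efficiency are rough estimates, not measured values.

```python
# Back-of-envelope arithmetic for one Ironwood pod.
# Assumptions: 4,614 TFLOPS per-chip peak (Google's published figure);
# 10 MW is a round upper bound standing in for "nearly 10 MW".
CHIPS_PER_POD = 9_216
PEAK_TFLOPS_PER_CHIP = 4_614
POD_POWER_WATTS = 10e6

TFLOPS_PER_EXAFLOPS = 1e6  # 1 EFLOPS = 10^18 FLOPS = 10^6 TFLOPS


def pod_peak_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate peak compute of the pod, in exaFLOPS."""
    return chips * tflops_per_chip / TFLOPS_PER_EXAFLOPS


def tflops_per_watt(exaflops: float, watts: float) -> float:
    """Peak compute per watt, in TFLOPS/W."""
    return exaflops * TFLOPS_PER_EXAFLOPS / watts


pod_ef = pod_peak_exaflops(CHIPS_PER_POD, PEAK_TFLOPS_PER_CHIP)
watts_per_chip = POD_POWER_WATTS / CHIPS_PER_POD

print(f"Pod peak:       {pod_ef:.2f} EFLOPS")    # 42.52
print(f"Power per chip: {watts_per_chip:.0f} W")  # 1085
print(f"Efficiency:     {tflops_per_watt(pod_ef, POD_POWER_WATTS):.2f} TFLOPS/W")  # 4.25
```

The power-per-chip line divides total pod power evenly across chips, so it is not a per-chip thermal design figure; because 10 MW is an upper bound, the true efficiency is somewhat higher than printed.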

A central goal of Ironwood is support for "thinking models," a category that includes Large Language Models (LLMs), Mixture of Experts (MoE) models, and advanced reasoning tasks. These models require massive parallel processing and efficient memory access, so Ironwood is designed to minimize on-chip data movement and latency during large tensor manipulations. Ironwood also features an enhanced SparseCore (a specialized accelerator for the ultra-large embeddings common in ranking and recommendation workloads), increased High-Bandwidth Memory (HBM) capacity and bandwidth (192 GB and 7.2 TB/s per chip), and improved ICI networking.
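To make the memory figures concrete, the sketch below computes total pod HBM and a simple bandwidth bound on decoding. It assumes 192 GB and 7.2 TB/s of HBM per chip (decimal units), a hypothetical 70-billion-parameter dense model stored at one byte per parameter, and that each decode step streams every weight from HBM once; it ignores KV-cache traffic, compute limits, and sharding across chips.

```python
# Rough HBM arithmetic for Ironwood.
# Assumptions: 192 GB and 7.2 TB/s of HBM per chip (decimal units).
CHIPS_PER_POD = 9_216
HBM_GB_PER_CHIP = 192
HBM_TB_PER_S_PER_CHIP = 7.2


def pod_hbm_petabytes(chips: int, gb_per_chip: float) -> float:
    """Total HBM across a pod, in PB (1 PB = 10^6 GB)."""
    return chips * gb_per_chip / 1e6


def max_decode_steps_per_s(weight_gb: float, tb_per_s: float) -> float:
    """Upper bound on decode steps per second when each step must read all
    weights from HBM once. Sequences batched into one step share that read,
    so this bounds steps, not total tokens."""
    return tb_per_s * 1e3 / weight_gb  # TB/s -> GB/s, divided by GB per step


# Hypothetical dense model: 70e9 parameters at 1 byte each = 70 GB,
# which fits in one chip's 192 GB with room left for the KV cache.
WEIGHT_GB = 70

pod_pb = pod_hbm_petabytes(CHIPS_PER_POD, HBM_GB_PER_CHIP)
steps = max_decode_steps_per_s(WEIGHT_GB, HBM_TB_PER_S_PER_CHIP)

print(f"Pod HBM:      {pod_pb:.2f} PB")      # 1.77
print(f"Decode bound: {steps:.0f} steps/s")  # 103
```

Batching and multi-chip sharding change the picture substantially; the point is only that per-chip bandwidth caps how quickly a single chip can sweep through the weights it holds.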

Developers can use Google's Pathways software stack to harness the combined compute of thousands of Ironwood TPUs. Pathways, developed by Google DeepMind, is a distributed runtime that enables dynamic scaling of inference workloads. Its features include disaggregated serving, which runs the prefill stage (processing the input prompt) and the decode stage (generating output tokens) on separate resources so each can scale independently, improving latency and throughput. Google Kubernetes Engine (GKE) also adds new inference capabilities, including GenAI-aware scaling and load balancing, which Google says can reduce serving costs, lower tail latency, and increase throughput.
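The idea behind disaggregated serving can be illustrated with a toy capacity model: prefill load grows with prompt length and decode load grows with output length, so separate pools let each be sized on its own. This is a minimal sketch, assuming throughput scales linearly with replica count; `Workload`, `size_pools`, and the throughput numbers are hypothetical and are not part of the Pathways or GKE APIs.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of an inference workload."""
    requests_per_s: float
    prompt_tokens: int   # tokens processed by prefill per request
    output_tokens: int   # tokens generated by decode per request


def replicas_needed(load_tokens_per_s: float, replica_tokens_per_s: float) -> int:
    """Smallest replica count (at least 1) whose combined throughput covers the load."""
    return max(1, math.ceil(load_tokens_per_s / replica_tokens_per_s))


def size_pools(w: Workload, prefill_tps: float, decode_tps: float) -> tuple[int, int]:
    """Size the prefill and decode pools independently."""
    prefill = replicas_needed(w.requests_per_s * w.prompt_tokens, prefill_tps)
    decode = replicas_needed(w.requests_per_s * w.output_tokens, decode_tps)
    return prefill, decode


# Hypothetical per-replica throughputs: prefill processes prompt tokens in
# parallel, so it is assumed to be far faster per replica than decode.
PREFILL_TPS = 20_000
DECODE_TPS = 1_000

long_prompts = Workload(requests_per_s=50, prompt_tokens=8_000, output_tokens=200)
long_answers = Workload(requests_per_s=50, prompt_tokens=500, output_tokens=1_000)

print(size_pools(long_prompts, PREFILL_TPS, DECODE_TPS))  # (20, 10)
print(size_pools(long_answers, PREFILL_TPS, DECODE_TPS))  # (2, 50)
```

The two workloads need very different prefill-to-decode ratios at the same request rate; with separate pools, that ratio can follow the traffic mix instead of being fixed by a single combined deployment.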

The introduction of Ironwood also reflects a broader shift in AI infrastructure: from responsive models that provide real-time information for people to interpret, to systems that proactively generate insights and interpretations. Google calls this the "age of inference," in which AI agents retrieve and generate data collaboratively to deliver actionable insights and answers.

Compared with the previous generation, Trillium, Ironwood offers five times the peak compute capacity and six times the high-bandwidth memory capacity, and it is twice as power-efficient. Together, these gains let Google Cloud customers run demanding AI workloads faster and with less energy per unit of work.
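The stated multipliers also imply a few derived figures. The sketch below assumes Ironwood's published 192 GB of HBM per chip and assumes that the compute and power-efficiency ratios refer to the same operating point, which the announcement does not spell out; under that assumption, five times the compute at twice the efficiency means roughly 2.5 times the power per chip.

```python
# Derived figures from the Ironwood-vs-Trillium multipliers.
# Assumptions: 192 GB HBM per Ironwood chip; compute and efficiency
# ratios refer to the same (peak) operating point.
IRONWOOD_HBM_GB = 192
HBM_CAPACITY_RATIO = 6     # Ironwood / Trillium
PEAK_COMPUTE_RATIO = 5     # Ironwood / Trillium
PERF_PER_WATT_RATIO = 2    # Ironwood / Trillium


def implied_power_ratio(compute_ratio: float, efficiency_ratio: float) -> float:
    """Power = compute / (compute per watt), so the ratios divide."""
    return compute_ratio / efficiency_ratio


trillium_hbm_gb = IRONWOOD_HBM_GB / HBM_CAPACITY_RATIO
power_ratio = implied_power_ratio(PEAK_COMPUTE_RATIO, PERF_PER_WATT_RATIO)

print(f"Implied Trillium HBM per chip: {trillium_hbm_gb:.0f} GB")     # 32
print(f"Implied peak power per chip:   {power_ratio:.1f}x Trillium")  # 2.5
```

Under the same assumption, a fixed job would use about half the energy on Ironwood even though each chip draws more power at peak, which is why the savings are best read per unit of work.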

With Ironwood, Google Cloud aims to offer customers a comprehensive AI-optimized platform with leading price, performance, and precision. The platform combines advanced infrastructure, world-class models, and Vertex AI, a robust developer platform with a full suite of tools for building multi-agent systems.