Meta is expanding its "Teen Accounts" system, initially launched on Instagram, to Facebook and Messenger. The move, announced April 8, 2025, aims to provide safer online experiences for younger users across Meta's platforms and give parents greater peace of mind. The expansion comes amid increasing scrutiny and growing legal pressure on social media companies regarding the potential risks their platforms pose to teens.

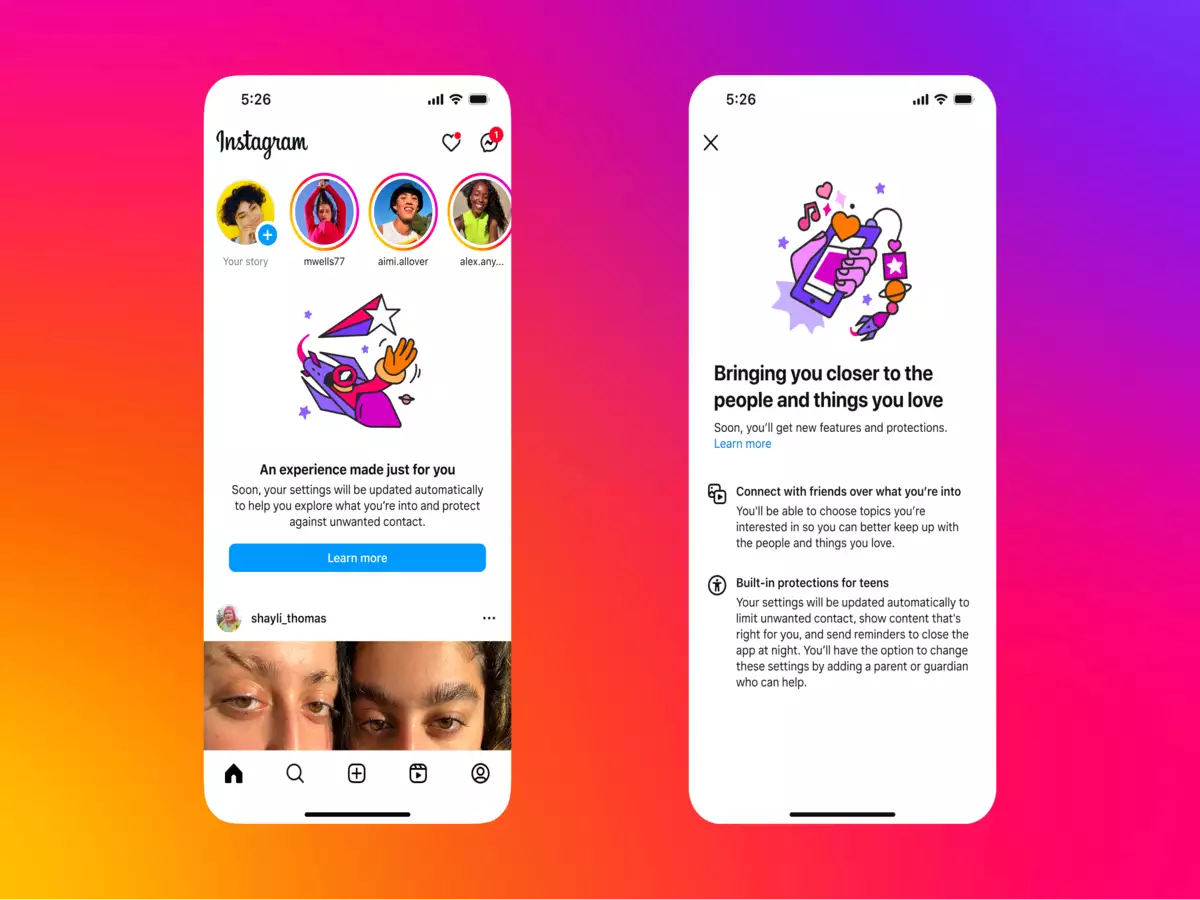

The core principle behind Teen Accounts is to provide built-in protections and stricter default settings for younger users, primarily those under 16, although some features extend to users under 18. These protections include limiting who can contact teens, restricting the type of content they see, and promoting healthier screen time habits. Meta says these measures are designed to address parents' biggest concerns about their children's online safety.

Teen Accounts on Facebook and Messenger will include several key features:

- Privacy by Default: Teen Accounts are automatically set to private, limiting who can view a teen's posts, stories, reels, friend lists, and followed pages. New followers must request access.

- Messaging Restrictions: Teens can receive messages only from existing Facebook friends or connections (people they've messaged before). People who have a teen's phone number can send message requests.

- Content Filtering: Sensitive and potentially offensive content is automatically filtered out, with the aim of showing teens age-appropriate material.

- Limited Interactions: Teens need to approve tags in posts before the posts appear on their profile. The "Allow anyone to remix your future public reels" setting is turned off by default.

- Time Management Tools: Teens receive notifications reminding them to leave Facebook after 60 minutes of daily use. A "sleep mode" mutes notifications overnight, typically between 10 PM and 7 AM.

In addition to expanding Teen Accounts to Facebook and Messenger, Meta is introducing new restrictions for Instagram Teen Accounts. Users under 16 will now need parental permission to go live on the platform. They will also need parental permission to disable the feature that blurs images containing suspected nudity in direct messages (DMs).

Meta first launched Teen Accounts on Instagram in September 2024. According to Meta, 97% of teens aged 13-15 have kept the default restrictions in place. Since then, approximately 54 million accounts have been moved into the more restrictive settings. A Meta-commissioned survey indicated that 94% of parents found the protections helpful.

The rollout of Teen Accounts on Facebook and Messenger will begin in the US, UK, Australia, and Canada, with plans to expand to other regions soon. The company notes that teens under 16 who open new accounts on Facebook and Messenger will automatically be placed into Teen Accounts and will need parental approval to relax any settings.

These changes come as major platforms such as Meta, TikTok, and YouTube face increasing pressure from lawmakers, regulators, and advocacy groups to protect children online. The UK's Online Safety Act, for example, requires tech companies to prevent or remove illegal content, including child abuse material, as well as other harmful material.